The generalization of neural networks can often be improved by label smoothing, which replaces the hard targets with soft targets that are a weighted average of the hard targets and the uniform distribution over labels. We introduce a new variation of label smoothing that improves the performance of sequence-based models such as BERT and ELMo.

Introduction:

Neural networks (NNs) have proved effective at solving classification problems in many areas. Szegedy et al. introduced label smoothing (LS), which improves accuracy by computing the cross entropy not with the ‘hard’ targets from the dataset, but with a weighted mixture of these targets and the uniform distribution. Label smoothing has been used successfully to improve the accuracy of deep learning models in many tasks, such as image classification, speech recognition, and machine translation.
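As a minimal sketch of the weighted mixture described above, the smoothed targets for a single example can be built as follows. The function name `smooth_labels` and its parameters are ours, chosen for illustration, and are not taken from any particular library.

```python
def smooth_labels(target_index, num_classes, smoothing=0.1):
    """Return (1 - smoothing) * one_hot(target) + smoothing * uniform."""
    if not 0 <= target_index < num_classes:
        raise ValueError("target_index must be in [0, num_classes)")
    # Every class receives an equal share of the smoothing mass.
    targets = [smoothing / num_classes] * num_classes
    # The correct class additionally keeps the remaining probability mass.
    targets[target_index] += 1.0 - smoothing
    return targets


soft = smooth_labels(target_index=2, num_classes=5, smoothing=0.05)
print([round(p, 3) for p in soft])
```

With 5 classes and smoothing 0.05, each wrong class receives 0.01 and the correct class receives 0.96, so the targets still sum to one.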

The goal of label smoothing is to prevent the largest logit from becoming much larger than all the others, which encourages uncertainty about the labels of a dataset. This uncertainty helps to counter over-fitting, and LS can therefore also be an efficient way to mitigate adversarial attacks.
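One way to see why the largest logit stays bounded is the following short derivation. The symbols are our own notation and do not appear in the original work: $K$ is the number of classes, $\epsilon$ the smoothing weight, $y$ the correct class, $z_k$ the logits, and $p_k$ the softmax outputs.

$$
q'_k = (1-\epsilon)\,\mathbb{1}[k = y] + \frac{\epsilon}{K}, \qquad
H(q', p) = -\sum_{k=1}^{K} q'_k \log p_k
$$

The cross entropy $H(q', p)$ is minimized when $p = q'$, so at the optimum the gap between the correct logit and any other logit is

$$
z_y - z_k = \log\frac{q'_y}{q'_k} = \log\left(1 + \frac{K(1-\epsilon)}{\epsilon}\right), \qquad k \neq y .
$$

This gap is finite for any $\epsilon > 0$ (about 4.5 for $K = 10$ and $\epsilon = 0.1$), whereas with hard targets ($\epsilon = 0$) the loss keeps decreasing as the gap grows without bound.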

Hinton et al. introduced knowledge distillation (KD), which improves accuracy by first training a few big models (teachers) and then training a student model on a mixture of the hard targets and the output of the teacher ensemble. Knowledge distillation has been used successfully to improve the accuracy of deep learning models and to transfer knowledge from large models to smaller ones.
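The mixture of hard targets and teacher output can be sketched as below. This is a simplified illustration, not the exact recipe of Hinton et al.: the function names, the mixing weight `alpha`, the `temperature` value, and the teacher logits are all assumptions made for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives softer outputs."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_targets(hard_index, teacher_logits_list, alpha=0.5, temperature=2.0):
    """Mix the one-hot target with the averaged teacher probabilities."""
    num_classes = len(teacher_logits_list[0])
    teacher_probs = [softmax(logits, temperature) for logits in teacher_logits_list]
    # Average the teachers to obtain the ensemble distribution.
    ensemble = [
        sum(probs[k] for probs in teacher_probs) / len(teacher_probs)
        for k in range(num_classes)
    ]
    # alpha weights the hard target, (1 - alpha) weights the ensemble output.
    return [
        alpha * (1.0 if k == hard_index else 0.0) + (1.0 - alpha) * ensemble[k]
        for k in range(num_classes)
    ]


# Two hypothetical teachers scoring three classes; class 0 is the true label.
teachers = [[5.0, 2.0, -1.0], [4.0, 3.0, -2.0]]
targets = distillation_targets(hard_index=0, teacher_logits_list=teachers)
print([round(p, 4) for p in targets])
```

Unlike uniform label smoothing, the wrong classes do not receive equal mass here: the student sees that the teachers consider class 1 more plausible than class 2.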

The goal of knowledge distillation is to enrich the limited knowledge present in the sparse targets with the knowledge contained in the output of the ensemble. KD works because much of the knowledge in the learned ensemble lies in the relative probabilities of extremely improbable wrong answers. For example, the ensemble may give a BMW a probability of one in a billion of being a garbage truck, but this is still far greater (in the log domain) than its probability of being a carrot.
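The log-domain point can be made concrete with a few lines of arithmetic. The garbage-truck probability is the one quoted above; the carrot probability of `1e-15` is a hypothetical value picked only for illustration.

```python
import math

p_garbage_truck = 1e-9   # "one in a billion", as in the example above
p_carrot = 1e-15         # hypothetical value, not from the original text

# In the linear domain both probabilities are indistinguishable from zero.
linear_gap = p_garbage_truck - p_carrot

# In the log domain the two wrong answers are clearly separated.
log_gap = math.log(p_garbage_truck) - math.log(p_carrot)

print(f"linear gap: {linear_gap:.3e}")
print(f"log gap:    {log_gap:.2f} nats")
```

The linear gap is on the order of one in a billion, while the log gap equals the logarithm of the probability ratio (a factor of a million under the assumed values), which is the signal the student can learn from.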

Gaussian Label Smoothing:

The inspiration for Gaussian label smoothing (GLS) came from the realization that in sequence tagging problems (such as named entity recognition, question answering, and semantic role labeling) the labels of two consecutive tokens are related, because consecutive tokens in a language are related. This is unlike typical classification problems, where two consecutive labels are unrelated.

For example, given the question ‘When did Beyonce start becoming popular’ and the context ‘Beyoncé was born and raised in Houston, Texas, she performed in various singing and dancing competitions as a child, and rose to fame in the late 1990s as lead singer of R&B girl-group Destiny’s Child’, the correct answer is ‘late 1990s’, but the probability of the span ‘in the late 1990s’ being an answer should be higher than that of any random token span.

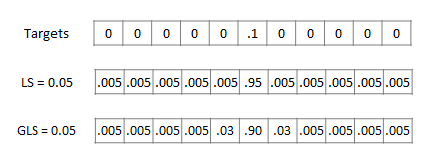

GLS takes into account the fact that the tokens in a sequence are related: instead of spreading the smoothing mass only over the uniform distribution across labels, as label smoothing does, we also include a Gaussian distribution centered on the correct token. See Figure 1.

Figure 1 - First, the original target labels; second, label smoothing with smoothing set to 0.05; third, Gaussian label smoothing with smoothing set to 0.05.
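The exact formula is not spelled out here, so the following is only one possible reading of GLS, sketched for a single target position (for example the answer start index in question answering). The function name and the parameters `sigma` (width of the Gaussian) and `uniform_share` (fraction of the smoothing mass kept uniform) are assumptions introduced for illustration.

```python
import math


def gaussian_smooth_labels(target_index, seq_len, smoothing=0.05,
                           sigma=1.0, uniform_share=0.5):
    """Soft targets over sequence positions.

    The correct position keeps (1 - smoothing); the smoothing mass is split
    between a uniform part and a discretized Gaussian centered on the target.
    """
    if not 0 <= target_index < seq_len:
        raise ValueError("target_index must be in [0, seq_len)")
    # Unnormalized Gaussian weights by distance to the correct position.
    weights = [
        math.exp(-((i - target_index) ** 2) / (2.0 * sigma ** 2))
        for i in range(seq_len)
    ]
    total = sum(weights)
    gaussian = [w / total for w in weights]
    uniform = 1.0 / seq_len
    targets = [
        smoothing * (uniform_share * uniform + (1.0 - uniform_share) * g)
        for g in gaussian
    ]
    targets[target_index] += 1.0 - smoothing
    return targets


gls = gaussian_smooth_labels(target_index=3, seq_len=8)
print([round(p, 4) for p in gls])
```

Positions next to the correct token receive more of the smoothing mass than distant ones, which matches the intuition that a prediction that is off by one token is less wrong than a random one. Setting `uniform_share=1.0` recovers standard label smoothing.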

The relationship to Knowledge Distillation

We predicted that the errors of an ensemble of large, well-trained, and well-regularized models would tend to miss the correct token by only one or a few tokens. This is consistent with the F1 score on SQuAD generally being higher than the exact match (EM) score.
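The gap between F1 and exact match on near-miss spans can be illustrated with a simplified metric. This sketch splits on whitespace and omits the answer normalization that the official SQuAD evaluation script applies, so its numbers are illustrative only.

```python
from collections import Counter


def exact_match(prediction, answer):
    """1.0 only if the predicted tokens equal the gold tokens exactly."""
    return float(prediction.split() == answer.split())


def token_f1(prediction, answer):
    """Token-overlap F1 between the predicted span and the gold span."""
    pred_tokens = prediction.split()
    gold_tokens = answer.split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


prediction = "in the late 1990s"   # boundary is off by two tokens
answer = "late 1990s"

em = exact_match(prediction, answer)
f1 = token_f1(prediction, answer)
print(f"EM = {em:.2f}, F1 = {f1:.2f}")
```

The boundary mistake costs the full exact match score but only part of the F1 score (precision 2/4, recall 2/2), which is why many small boundary errors show up as an F1 that is higher than EM.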

After careful analysis of the model errors, this assumption turned out to hold: small mistakes in span boundaries are one of the major error types. Thus, Gaussian label smoothing shares some of the characteristics of knowledge distillation in sequence tagging, where the tokens of a sequence are related.

Experiments

We experimented with BERT Base on named entity recognition and BERT Large on question answering with SQuAD 2.0.

After reproducing the results of the paper, we compared our standard implementation with a label smoothing variant and a Gaussian label smoothing variant. All hyperparameters were kept fixed.

| System | Dev F1 | Test F1 |
| --- | --- | --- |
| BERT BASE (paper) | 96.4 | 92.4 |
| BERT BASE (our-implementation) | 96.4 | 92.4 |
| BERT BASE + LS | 96.4 | 92.4 |
| BERT BASE + GLS | 96.5 | 92.6 |

Table 1 - CoNLL-2003 Named Entity Recognition results

For named entity recognition we used the CoNLL-2003 dataset.

For SQuAD 2.0, we trained the model for 3 epochs, decreasing the learning rate at the start of each epoch. We used the Adam optimizer with 200 warm-up steps, during which the learning rate ramped up to the desired value. Table 2 contains the results.
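A schedule of this shape could be sketched as follows. Only the 200 warm-up steps and the per-epoch decrease come from the description above; the linear ramp, the base learning rate, the number of steps per epoch, and the decay factor are hypothetical placeholders.

```python
def learning_rate(step, base_lr=3e-5, warmup_steps=200,
                  steps_per_epoch=1000, epoch_decay=0.5):
    """Linear warm-up to base_lr, then a lower rate at the start of each epoch.

    base_lr, steps_per_epoch, and epoch_decay are illustrative assumptions.
    """
    if step < warmup_steps:
        # Ramp up linearly so that the last warm-up step reaches base_lr.
        return base_lr * (step + 1) / warmup_steps
    epoch = step // steps_per_epoch
    # Epoch 0 uses base_lr; each later epoch multiplies it by epoch_decay.
    return base_lr * (epoch_decay ** epoch)


for step in (0, 199, 500, 1000, 2000):
    print(step, learning_rate(step))
```

In an actual training loop the returned value would be assigned to the optimizer before each update step.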

Update Nov 2019

We applied LS and GLS to the ALBERT XLARGE model on the SQuAD 2.0 dataset. Table 3 shows the results.

| System | Dev F1 | Dev EM |
| --- | --- | --- |
| BERT LARGE (our-implementation) | 84.1 | 81.0 |
| BERT LARGE + LS | 84.2 | 81.1 |
| BERT LARGE + GLS | 84.9 | 81.4 |

Table 2 - BERT Large SQuAD 2.0 Dev results

| System | Dev F1 | Dev EM |
| --- | --- | --- |
| ALBERT XLARGE (our-implementation) | 87.9 | 84.1 |
| ALBERT XLARGE + LS | 88.05 | 84.5 |
| ALBERT XLARGE + GLS | 88.21 | 85.2 |

Table 3 - ALBERT XLarge SQuAD 2.0 Dev results

Conclusion

Label smoothing is used to train many state-of-the-art models. In this work, we proposed a variant that is well suited to sequence tagging problems because it takes into account the fact that consecutive tokens, and therefore their labels, are related. We performed experiments to evaluate the new variant and compare it with standard label smoothing.